Malaysian Address Normalization with Python (Quick Start)

A Sample Use Case to Get Started with Malaya NLTK.

A quick start to:

1. get started with Malaya NLTK, and
2. get started with Malaysian address normalization using Python.

Malaya NLTK makes Malaysian address normalization far simpler than it was five years ago, when I first built an address normalizer.

Notes:

1. Expand the images for a better view.
2. This is not a comprehensive guide. If you would like to know more, feel free to connect with me.

```python
##########################
# Start of Code
##########################

##########################
# Introduction
##########################
# Getting started with Malaya, a natural-language toolkit library for
# Bahasa Malaysia, powered by deep learning (TensorFlow).
# Malaya is the brainchild of Mr Husein Zolkepli.
##########################
# Address normalization (standardization) forms the basis of household
# sizing in marketing. It facilitates analysis of household composition,
# from opportunity sizing of products and services down to category spend.
#
# Knowing (or having inferred) the age composition of each household,
# marketers get a better view of each family's life stage and can therefore
# provide greater context and personalization.
#
# Beyond that, behavioural analysis such as spending patterns, lifestyle
# and affluence index further augments the context of the analysis.
##########################
```
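The household-sizing idea above can be illustrated with a minimal sketch. It assumes each customer record already carries a normalized address string (roughly what the pipeline in this article produces) and an age; the records, the `age_band` cut-offs and the `household_profiles` helper are all hypothetical. Records sharing a normalized address are treated as one household, which is why variants such as 'Jln' and 'Jalan' must be normalized first: otherwise a single household would be split in two.

```python
from collections import defaultdict

# Hypothetical customer records with already-normalized addresses.
customers = [
    {"id": "c1", "normalized_address": "5 jalan 2/2 taman bunga 88811 kuala lumpur", "age": 42},
    {"id": "c2", "normalized_address": "5 jalan 2/2 taman bunga 88811 kuala lumpur", "age": 39},
    {"id": "c3", "normalized_address": "5 jalan 2/2 taman bunga 88811 kuala lumpur", "age": 8},
    {"id": "c4", "normalized_address": "2 jalan 2 kampung baru 88800 kuala lumpur", "age": 67},
]

def age_band(age):
    """Assumed life-stage bands, for illustration only."""
    if age < 18:
        return "child"
    if age < 60:
        return "adult"
    return "senior"

def household_profiles(records):
    """Group records sharing a normalized address into one household and
    count members per (assumed) age band."""
    households = defaultdict(lambda: defaultdict(int))
    for rec in records:
        households[rec["normalized_address"]][age_band(rec["age"])] += 1
    # convert the nested defaultdicts to plain dicts for readability
    return {addr: dict(bands) for addr, bands in households.items()}

for address, bands in household_profiles(customers).items():
    print(address, bands)
```

Each household's age-band counts can then feed the life-stage and personalization analysis described above.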

```python
##########################
# Setup
##########################
# Installation guide:
# https://malaya.readthedocs.io/en/latest/Installation.html
# pip install malaya
##########################
```

```python
%%time
# import libraries (%%time must be the first line of a Jupyter cell)
import malaya
import pandas as pd
import string
import numpy as np
import re
```

```python
##########################
# For reference: the signature and docstring of malaya.spell.probability,
# reproduced here. This local stub is not called anywhere below.
def probability(sentence_piece: bool = False, **kwargs):
    """
    Train a Probability Spell Corrector.

    Parameters
    ----------
    sentence_piece: bool, optional (default=False)
        if True, reduce possible augmentation states using sentence piece.

    Returns
    -------
    result: malaya.spell.Probability class
    """
```

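```python
##########################
# Spell correctors and normalizer
##########################
corrector = malaya.spell.probability()        # probability-based corrector, passed to the normalizer
symspell_corrector = malaya.spell.symspell()  # SymSpell corrector, applied directly further below
normalizer = malaya.normalize.normalizer(corrector)
```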

```python
##########################
# Sample data
##########################
# source: https://www.bestrandoms.com/random-address-in-my
# for simplicity, each address is split into Address1, Postcode and State;
# we only show normalization examples for Address1 and State
##########################
df = pd.DataFrame({
    'ID': ['abc123', 'bcd234', 'cde345', 'def456', 'efg567', 'fgh678',
           'ghi789', 'hij890', 'ijk901', 'jkl012', 'klm123'],
    'Address1': [
        '5, Jalan 2/2, Taman Bunga',
        '2, Jln 2, Kampng Baru',
        'No 3, Jalan Dua, Tmn Perdana',
        'NO 18-1, Jalan 13/5 Taman Melati Gombak',
        '29E Jln Tandang Seksyen 51 Petaling Jaya',
        'Lorong Hulu Balang 30B, Taman Sentosa,',
        '73 Jln Yam Tuan Seremban',
        'Menara CITIBANK, Jln Ampang, Kul',
        '57-B Jalan Ss22/19 Damansara Jaya',
        '87, Lebuh Macallum, George Town, 10300',
        'Lrg Panggung, City Centre, 50000',
    ],
    'Postcode': ['88811', '88800', '58700', '53100', '46050', '42100',
                 '70000', '50450', '47400', '10300', '50000'],
    'State': ['KL', 'KUALA LUMPUR', 'KL.', 'Wilayah Persekutuan KL', 'Selangor',
              'Selngor', 'Negeri Sembilan', 'Kuala Lumpur', 'Selangor',
              'Pulau Pinang', 'KL'],
})
```

```python
##########################
# Abbreviation dictionary
##########################
# sample short-form/abbreviation dictionary;
# this lets us convert common shorthands immediately.
# In practice you will need more, e.g. those for tingkat, medan, etc.
shortform_dict = {
    'tmn': 'taman',
    'kg': 'kampung',
    'jln': 'jalan',
    'jln.': 'jalan',
    'lrg': 'lorong',
    'st': 'street',
}
```

```python
##########################
# Functions to be used
##########################

# remove punctuation and change to lowercase
def remove_punctuations(text):
    return text.translate(str.maketrans('', '', string.punctuation)).lower()

# change to lowercase
def apply_lowercase(text):
    return text.lower()

# strip a trailing comma; non-string cells (e.g. None padding from the split) pass through
def remove_endcomma(text):
    try:
        return text.rstrip(',')
    except AttributeError:
        return text

# Malaya normalizer: normalize() returns a dict; we take the value of its first entry
def applymalaya_normalizer(cell):
    try:
        return list(normalizer.normalize(cell).items())[0][1]
    except Exception:
        return cell

# Malaya spell corrector; we opt for the simplest spell corrector
def applymalaya_symspell_corrector(cell):
    try:
        return symspell_corrector.correct(cell)
    except Exception:
        return cell

# Malaya word2num, e.g. 'dua' --> 2; falls back to the original cell if word2num raises
def applymalaya_word2num(cell):
    try:
        return malaya.word2num.word2num(cell)
    except Exception:
        return cell
##########################
```

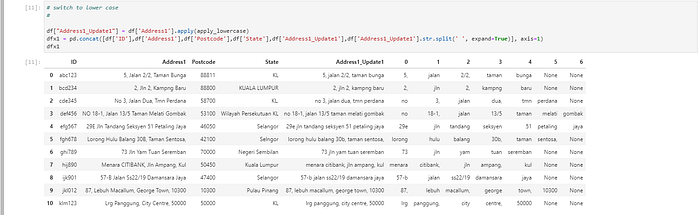

```python
# switch to lower case, then split with space as delimiter
# '2, Jln 2, Kampng Baru' --> '2, jln 2, kampng baru'
# Kampng --> kampng
# '2, jln 2, kampng baru' --> '2,' + 'jln' + '2,' + 'kampng' + 'baru'
df["Address1_Update1"] = df['Address1'].apply(apply_lowercase)
dfx1 = pd.concat([df['ID'], df['Address1'], df['Postcode'], df['State'],
                  df['Address1_Update1'],
                  df['Address1_Update1'].str.split(' ', expand=True)], axis=1)
dfx1
```

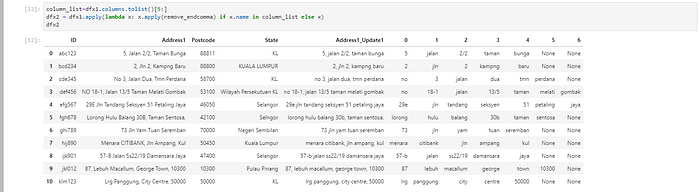

```python
# strip the comma at the end of each token
# for example, the comma at the end of 'jalan 2/2,' will be stripped
column_list = dfx1.columns.tolist()[5:]  # the token columns produced by the split
dfx2 = dfx1.apply(lambda x: x.apply(remove_endcomma) if x.name in column_list else x)
dfx2
```

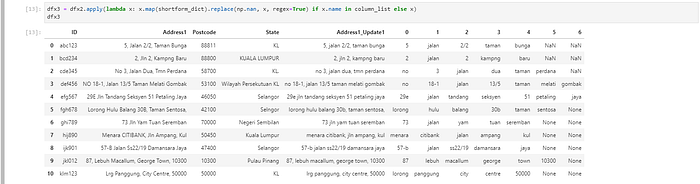

```python
# apply abbreviation mappings, e.g. 'jln' --> 'jalan'
# we apply these visible ones ourselves, even though Malaya NLTK is capable of this;
# tokens not found in shortform_dict are kept as they are
dfx3 = dfx2.apply(lambda x: x.map(lambda t: shortform_dict.get(t, t)) if x.name in column_list else x)
dfx3
```
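For readers who want to see the token logic without pandas, here is a minimal plain-Python sketch of the lowercase, split, strip and expand steps above for a single address. The function name `clean_address_tokens` is hypothetical, and `SHORTFORMS` simply repeats `shortform_dict` so the block runs on its own. One deliberate difference: it splits with `str.split()` rather than `split(' ')`, so repeated spaces in a raw address do not create empty tokens.

```python
# Copy of shortform_dict so this sketch is self-contained.
SHORTFORMS = {"tmn": "taman", "kg": "kampung", "jln": "jalan",
              "jln.": "jalan", "lrg": "lorong", "st": "street"}

def clean_address_tokens(address, shortforms=SHORTFORMS):
    """Lowercase, split on whitespace, strip trailing commas, expand short forms."""
    # str.split() with no argument collapses runs of whitespace,
    # so double spaces do not produce empty tokens (split(' ') would).
    tokens = address.lower().split()
    tokens = [t.rstrip(",") for t in tokens]
    # drop tokens that were only a comma and are now empty
    tokens = [t for t in tokens if t]
    # dict.get(t, t) keeps tokens that are not in the dictionary unchanged
    return [shortforms.get(t, t) for t in tokens]

print(clean_address_tokens("2, Jln 2, Kampng Baru"))
```

'kampng' survives this step untouched because it is a misspelling rather than a known short form; that is left to the spell corrector.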

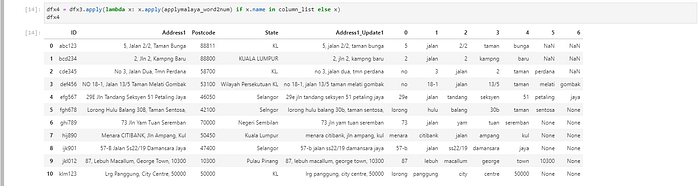

```python
# word2num is applied to facilitate geocoding exercises (not covered here)
# 'Jalan Dua' --> 'Jalan 2'
dfx4 = dfx3.apply(lambda x: x.apply(applymalaya_word2num) if x.name in column_list else x)
dfx4
```
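Malaya's `word2num` handles this conversion in the pipeline above. The lookup idea behind the 'Jalan Dua' --> 'Jalan 2' example can be sketched with a tiny hypothetical table that covers only the single Malay number words one to ten; it is not Malaya's implementation and does not handle compound numbers such as 'dua puluh' (twenty). It returns digit strings so the tokens can be joined back into an address directly.

```python
# Hypothetical stand-in for malaya.word2num.word2num, covering only
# single Malay number words from one to ten.
MALAY_NUMBERS = {
    "satu": 1, "dua": 2, "tiga": 3, "empat": 4, "lima": 5,
    "enam": 6, "tujuh": 7, "lapan": 8, "sembilan": 9, "sepuluh": 10,
}

def words_to_digits(tokens):
    """Replace single Malay number words with digit strings; keep other tokens."""
    return [str(MALAY_NUMBERS[t]) if t in MALAY_NUMBERS else t for t in tokens]

print(words_to_digits(["no", "3", "jalan", "dua", "taman", "perdana"]))
```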

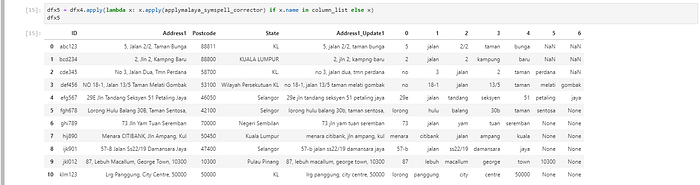

```python
# spell corrector, e.g. 'kampng' --> 'kampung'
# luckily 'kampng' --> 'kmpg' did not materialize HAHA
dfx5 = dfx4.apply(lambda x: x.apply(applymalaya_symspell_corrector) if x.name in column_list else x)
dfx5
```
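Malaya's SymSpell corrector does the heavy lifting above. To show the general idea of dictionary-based correction without loading a model, here is a minimal sketch using the standard-library `difflib.get_close_matches`, which ranks candidates by `SequenceMatcher` similarity ratio. This is a swapped-in technique, not SymSpell (which finds candidates through symmetric deletes within an edit distance); the `VOCABULARY` list, the `correct_token` helper and the 0.8 cutoff are assumptions.

```python
from difflib import get_close_matches

# Hypothetical reference vocabulary of address words; a real one would be
# much larger (street types, area names, state names, ...).
VOCABULARY = ["jalan", "taman", "kampung", "lorong", "seksyen", "baru", "selangor"]

def correct_token(token, vocabulary=VOCABULARY, cutoff=0.8):
    """Return the closest vocabulary word for a misspelled token.

    Known words and tokens containing digits (house numbers, postcodes)
    are returned unchanged, as are tokens with no match above `cutoff`.
    """
    if token in vocabulary or any(ch.isdigit() for ch in token):
        return token
    matches = get_close_matches(token, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else token

print([correct_token(t) for t in ["2", "jalan", "2", "kampng", "baru"]])
```

Skipping tokens that contain digits keeps house numbers such as '18-1' away from the corrector, and a fairly high cutoff leaves unfamiliar but valid names such as 'perdana' alone.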

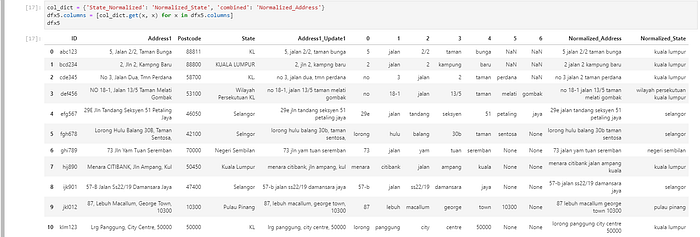

```python
# a quick exercise to normalize the state;
# you can opt for a dictionary to streamline this further,
# for example, 'wilayah persekutuan kuala lumpur' --> 'kuala lumpur'

# join the cleaned tokens back into one string, skipping None/NaN/empty cells
# (a plain str.replace('nan', '') would also damage words such as 'kenanga')
dfx5['combined'] = dfx5[column_list].apply(
    lambda row: ' '.join(str(t) for t in row if pd.notna(t) and str(t) != ''), axis=1)

dfx5["State_Normalized"] = (dfx5["State"]
                            .apply(remove_punctuations)
                            .apply(applymalaya_normalizer)
                            .str.lower()
                            .apply(applymalaya_symspell_corrector))

col_dict = {'State_Normalized': 'Normalized_State', 'combined': 'Normalized_Address'}
dfx5 = dfx5.rename(columns=col_dict)
dfx5
```
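As the comment above suggests, a dictionary can streamline state normalization. Here is a minimal sketch, assuming a hand-maintained alias table (`STATE_ALIASES`, hypothetical) keyed on the cleaned raw value; it applies the same punctuation removal and lowercasing as `remove_punctuations` but skips the Malaya normalizer and spell corrector entirely. The trade-off: a lookup table is predictable and fast, but it only covers the variants someone has added to it.

```python
import string

# Hypothetical alias table; keys are raw states after lowercasing and
# removing punctuation. Extend it with the variants found in your data.
STATE_ALIASES = {
    "kl": "kuala lumpur",
    "wilayah persekutuan kl": "kuala lumpur",
    "wilayah persekutuan kuala lumpur": "kuala lumpur",
    "selngor": "selangor",
    "penang": "pulau pinang",
}

_PUNCT_TABLE = str.maketrans("", "", string.punctuation)

def normalize_state(raw):
    """Lowercase, drop punctuation, collapse spaces, then map known aliases."""
    cleaned = " ".join(raw.translate(_PUNCT_TABLE).lower().split())
    # unknown states fall through unchanged (but already cleaned)
    return STATE_ALIASES.get(cleaned, cleaned)

states = ["KL", "KUALA LUMPUR", "KL.", "Wilayah Persekutuan KL", "Selngor", "Negeri Sembilan"]
print([normalize_state(s) for s in states])
```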

```python
# quick comparison of pre-normalized versus normalized address
from IPython.display import display

display(dfx5[["ID", "Address1", "Postcode", "State", "Normalized_Address", "Normalized_State"]])

dfx5['Pre_Normalized_Address'] = dfx5['Address1'] + ' ' + dfx5['Postcode'] + ' ' + dfx5['State']
dfx5['Normalized_Address'] = dfx5['Normalized_Address'] + ' ' + dfx5['Postcode'] + ' ' + dfx5['Normalized_State']

display(dfx5[["ID", "Pre_Normalized_Address", "Normalized_Address"]].style.set_properties(**{
    'width': '500px',
    'max-width': '500px',
}))

##########################
# End of Code
##########################
```

References:

https://malaya.readthedocs.io/en/latest/

https://www.bestrandoms.com/random-address-in-my

https://www.google.com.my/maps (source of the image)

Malaya NLTK is the brainchild of Mr Husein Zolkepli. Do support Malaya and Malay-Dataset development on BuyMeACoffee: https://www.buymeacoffee.com/huseinzolkepli